Written on 10 December 2015

Tools to help clean big data

You'd be forgiven for thinking that in this day and age, extracting useful information from data provided by the supermarkets would be a simple task. The data provided by the "Big Four" supermarkets in the UK; Sainsburys, Asda, Tesco and Waitrose doesn't contain UPCs or EANs. There are countless spelling mistakes and variations on spellings even down to the brand level. Different character sets are used and supermarkets will even change the way they name products from week to week. To combat all of these things and more, we have developed a number of different tools to help make the process as easy as possible.

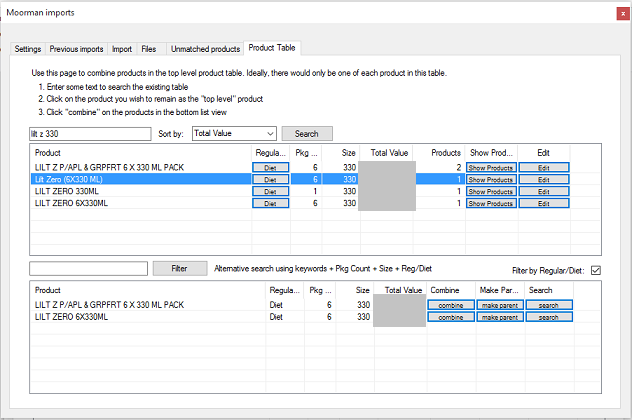

A Brief Overview of the Product Matching tool

This human element is most important in identifying duplicate products and classifying them as the same. With the huge amount of possible variations in spelling, only a human can come to the conclusion, with a good degree of certainty that

Lilt zero 6x330ml

is the same as

Lilt z p/apl & grpfrt 6 x 330 ml pack

The computer's job is to make the human's job as simple as possible. The image below shows the other possible duplicates of Lilt Zero (6X330 ML) in the bottom list. If they are the same product then the user clicks "combine" or "make parent" to identify a single value to report on.

using computers to help humans is the only way to make sense of this data

When the user clicks on "Lilt Zero (6x330ml)", the application automatically searches the product table for possible matches and returns them based on the description. The results are weighted according to the position of the words and ordered accordingly. This single screen is aso where the user can specify parent/child products, view existing matches and rollback changes as well as change all metrics apart from the product description (because the same product description is likely to be sent from the supermarkets in the future). Keeping the layout simple and unclutered is important for the human user and belies the complexity of the underlying functionality.

One man's trash is another man's treasure

Most people out there groan when they have to think about making sense of the masses of data they collect, we revel in it. Maybe it's because we like to tut at other people's mistakes and roll our eyes at flawed processes that generated the "mess" in the first place. Maybe it's because we like to see a picture emerge from that seemingly impossible jigsaw. Whatever it is, we've had one of the most difficult puzzles to date trying to make sense of data from the "Big Four".

On of the major stumbling blocks we experienced is that the data supplied by the supermarkets doesn't include UPCs/EANs (tut-tut). That means we need to match by product descriptions which, as you can imagine, vary not only between the supermarkets but from week to week. There are also lots of variations between products in the same range from the same supermarket and plenty of spelling mistakes to boot (not to mention other mistakes in quantity, size, packaging, flavour....). Most people would give in here and quote the term GIGO (garbage in, garbage out) which was first referred to in 1963 and relates to the fact that computers, because they work by logical processes unquestioningly process unintended, even nonsensical, input data ("garbage in") and produce undesired, often nonsensical, output ("garbage out").

We're a little more in 'unpolished jewel' camp, believing that most data isn't complete garbage but human intervention is required to clean things up. You can't leave it entirely to the machines (even 50+ years after Charles Babbage's musings) but the machines can help make the process of cleaning it up a heck of a lot easier.

We've ended up developing tools to help make the process as simple as possible whilst providing the ability for users to undo their changes as well as track them, report on them and even create their own rules.

Fortnightly updates

Even when the products have been cleaned there is still the risk of importing more errant data when the new supermarket data comes through. Every two weeks the clients imports supermarket data and goes through this same process:

- Import the supermarket data using the tool (above).

- Rules automatically run to fix generic errors and non UTF-8 characters

- Check for unmatched products. Unmatched products are ordered by sales value by default so that the user can ascertain how important it is that they are fixed.

- Using dashboards created by Moor Consulting view possible exception data, combined data, changes and other areas that may requtitivation

- And finally, the who purpose of this excercise is to use dashboards for analysing sales of products across the entire industry.

Data management

Of course, what computers do well is store the cleaned data and our tools help manage the data that has been imported. This includes rolling back / deleting unwanted data which may, for example, have been imported in error. Users need to be able to see the batches that have previously been imported and the software needs to minimise the risk of the same data being imported again.

Updates and changes

Using RESTful web services we send rules and initiate updates to the client application without getting the user involved with installing anything. Why get humans involved when you don't need to?